Technical SEO

What is an XML sitemap: how to generate it and submit it to Google

What type of XML sitemap is right for you and for your site? Find out by reading this sitemap guide!...

SEO Tester Online

January 24, 2020

Guide to the robots.txt file: what it is and why it is so important

How do you create a robots.txt file and where should you place it? Find out in this short article!...

SEO Tester Online

December 13, 2019

How to create SEO-friendly URLs

In SEO it's the details that make the difference. Read the article to understand how to make your UR...

SEO Tester Online

December 13, 2019

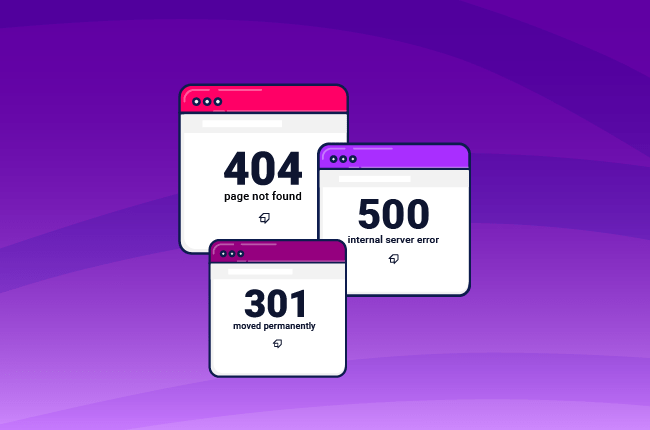

Guide to HTTP Status Codes

What are the HTTP status codes to check and optimize to improve your site's SEO? Read the guide!...

SEO Tester Online

December 12, 2019