What is crawling and why is it crucial for SEO?

To understand SEO and its dynamics, it is crucial knowing how a search engine analyzes and organizes the pieces of information it collects.

One of the fundamental processes that make search engines to index content is the so-called crawling. By this term, we mean the work the bot (also called spider) does when it scans a webpage.

How crawling works

The search engines use crawling to access, discover, and scan pages around the web.

When they explore a website, they visit all the links contained in it and follow the instructions included in the robots.txt file. In this file, you can find the directions for the search engine on how it should “crawl” the website.

Through the robots.txt file, we can suggest the search engine to ignore particular resources within our website. Through the sitemap (i.e., the list of the site URLs), instead, we can help the crawler navigate our website, providing it with a map of its resources.

Crawlers use algorithms to establish the frequency with which they scan a specific page and how many pages of the website it must scan.

These algorithms help crawlers to tell a frequently updated page from one that doesn’t change over time: the crawler would scan the first one more regularly. A key concept, from this point of view, is the crawl budget.

Crawling of images, audio and video files

Usually, search engines don’t scan and index every URL address that they meet on their way.

We are not saying that crawlers are not capable of interpreting content other than text files. They are, and they’re getting better at it. Anyway, it’s better to use filenames and metadata to help search engines reading, indexing, and ranking the content on the SERP.

Crawlers on links, sitemaps, and submit pages

Crawlers discover new pages by scanning the already-existing ones and extracting the links to other pages.

The crawler adds the addresses to the yet-to-be-analyzed file list and, then, the bot will download them.

In this process, search engines will always find new webpages that, in their turn, will link to other pages.

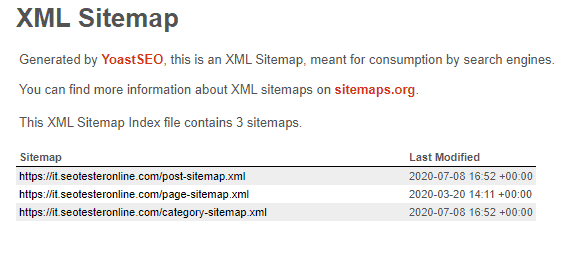

Another way search engines have to find new pages is to scan sitemaps. As we said before, a sitemap is a list of scannable URLs.

A third way is to send the URLs to search engines manually. It is a solution for telling Google that we have new content without waiting for the next scheduled scan.

We can ask for a scan via Google Search Console.

However, you should resort to it only when you want to make Google scan several pages (imagine submitting one page at the time). Conversely, Google prefers XML sitemaps for large URL volumes.

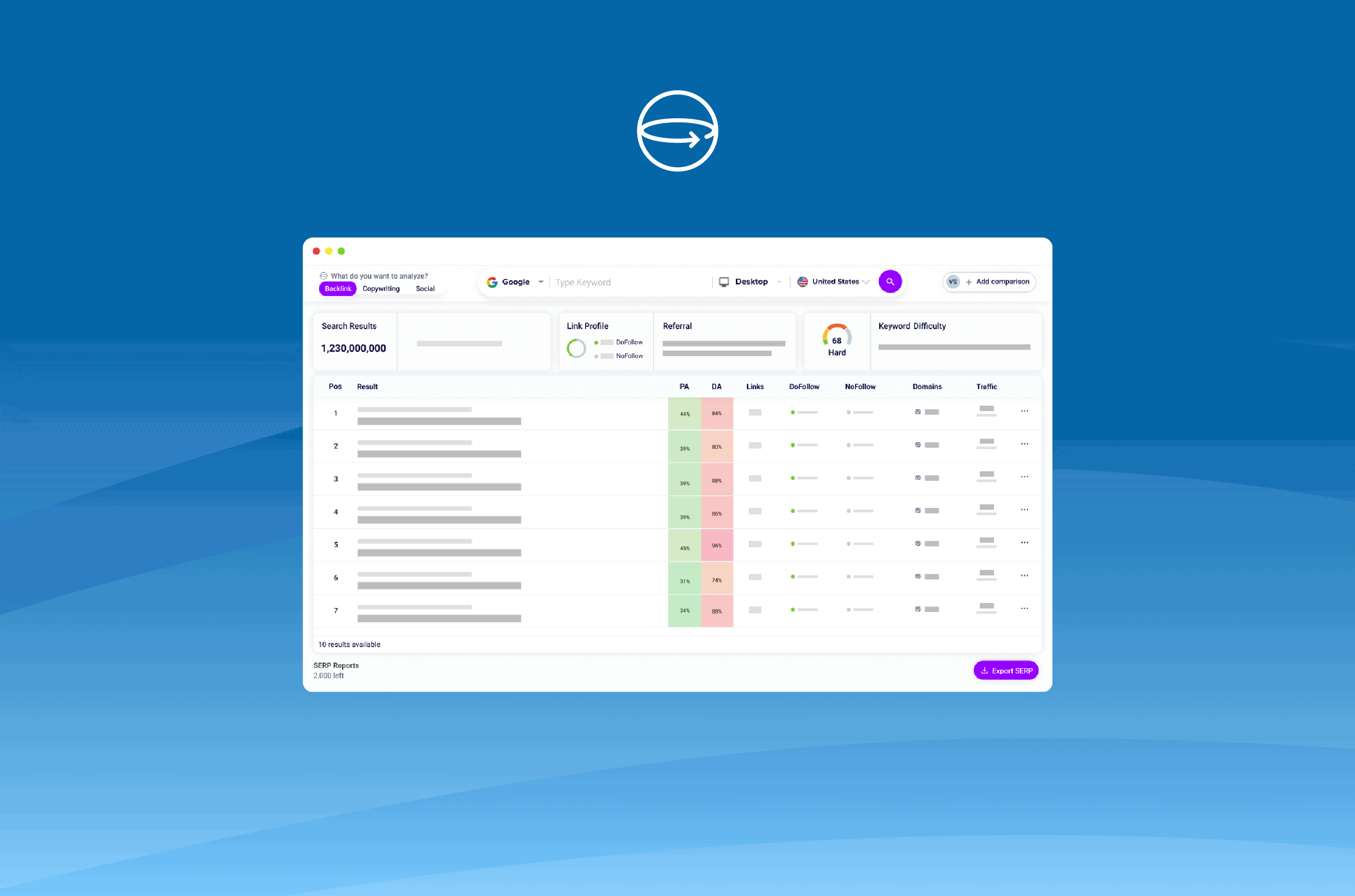

How do Search Engines work?

For sure, Search Engines are fascinating. Their algorithms are more and more complex each day and it’s not easy (sometimes even impossible) to fully understand how they work.

If you want to know more, we suggest you read our article about the Crawling, Indexing and Ranking phases of Search Engines.